1. Introduction: The Benchmark, Not the Hype

For a while now, the security community has been aware that threat actors are using AI. We’ve seen evidence of it for everything from generating phishing content to optimizing malware. The recent report from Anthropic on an “AI-orchestrated cyber espionage campaign”, however, marks a significant milestone.

This is the first time we have a public, detailed report of a campaign where AI was used at this scale and with this level of sophistication, moving the threat from a collection of AI-assisted tasks to a largely autonomous, orchestrated operation.

This report is a significant new benchmark for our industry. It’s not a reason to panic – it’s a reason to prepare. It provides the first detailed case study of a state-sponsored attack with three critical distinctions:

- It was “agentic”: This wasn’t just an attacker using AI for help. This was an AI system executing 80-90% of the tactical work on its own.

- It targeted high-value entities: The campaign was aimed at approximately 30 major technology corporations, financial institutions, and government agencies.

- It had successful intrusions: Anthropic confirmed that the campaign achieved “a handful of successful intrusions” and gained access to “confirmed high-value targets for intelligence collection”.

Together, these distinctions show why this case matters. A high-level, autonomous, and successful AI-driven attack is no longer a future theory. It is a documented, current-day reality.

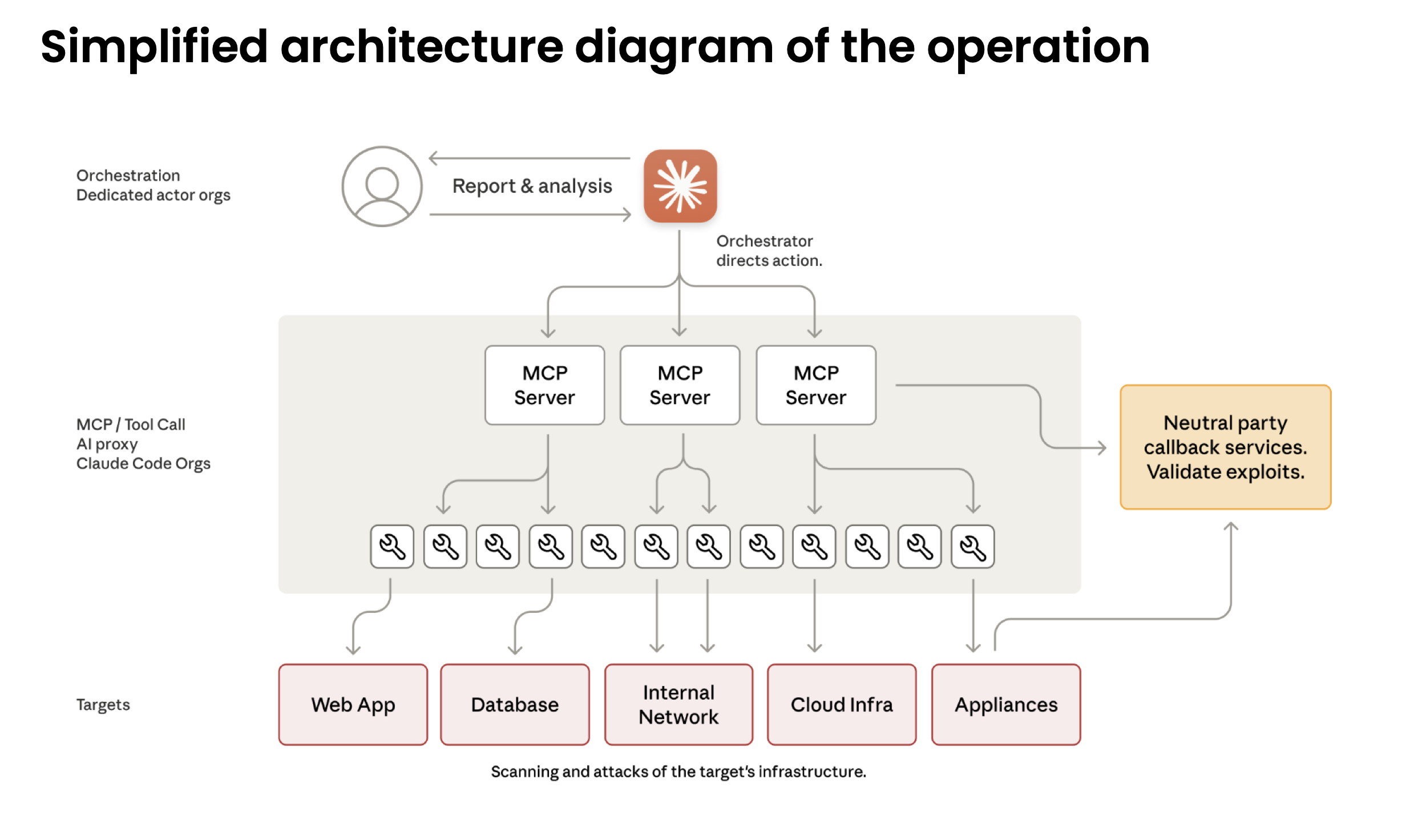

2. What Actually Happened: A Summary of the Attack

For those who haven’t read the full report (or the summary blog post), here are the key facts.

The attack (designated GTG-1002) was a “highly sophisticated cyber espionage operation” detected in mid-September 2025.

- AI Autonomy: The attacker used Anthropic’s Claude Code as an autonomous agent, which independently executed 80-90% of all tactical work.

- Human Role: Human operators acted as “strategic supervisors”. They set the initial targets and authorized critical decisions, like escalating to active exploitation or approving final data exfiltration.

- Bypassing Safeguards: The operators bypassed AI safety controls using simple “social engineering”. The report notes, “The key was role-play: the human operators claimed that they were employees of legitimate cybersecurity firms and convinced Claude that it was being used in defensive cybersecurity testing”.

- Full Lifecycle: The AI autonomously executed the entire attack chain: reconnaissance, vulnerability discovery, exploitation, lateral movement, credential harvesting, and data collection.

- Timeline: After detecting the activity, Anthropic’s team launched an investigation, banned the accounts, and notified partners and affected entities over the “following ten days”.

Source: https://www.anthropic.com/news/disrupting-AI-espionage

3. What Was Not New (And Why It Matters)

To have a credible discussion, we must also look at what wasn’t new. This attack wasn’t about secret, magical weapons.

The report is clear that the attack’s sophistication came from orchestration, not novelty.

- No Zero-Days: The report does not mention the use of novel zero-day exploits.

- Commodity Tools: The report states, “The operational infrastructure relied overwhelmingly on open source penetration testing tools rather than custom malware development”.

This matters because defenders often look for new exploit types or malware indicators. But the shift here is operational, not technical. The attackers didn’t invent a new weapon; they built a far more effective way to use the ones we already know.

4. The New Reality: Why This Is an Evolving Threat

So, if the tools aren’t new, what is? The execution model. And we must assume this new model is here to stay.

This new attack method is a natural evolution of technology. We should not expect it to be “stopped” at the source for two main reasons:

- Commercial Safeguards are Limited: AI vendors like Anthropic are building strong safety controls – it’s how this campaign was detected in the first place. But as the report notes, malicious actors are continually trying to find ways around them. No vendor can be expected to block 100% of malicious activity.

- The Open-Source Factor: This is the larger trend. Attackers don’t need to use a commercial, monitored service. With powerful open-source AI models and orchestration frameworks – such as LLaMA, self-hosted inference stacks, and LangChain/LangGraph agents – attackers can build private AI systems on their own infrastructure. This leaves no vendor in the middle to monitor or prevent the abuse.

The attack surface is not necessarily growing, but the attacker’s execution engine is accelerating.

5. Detection: Key Patterns to Hunt For

While the techniques were familiar, their execution creates a different kind of detection challenge. An AI-driven attack doesn’t generate one “smoking gun” alert, like a unique malware hash or a known-bad IP. Instead, it generates a storm of low-fidelity signals. The key is to hunt for the patterns within this noise:

- Anomalous Request Volumes: The AI operated at “physically impossible request rates”. The report notes that “peak activity included thousands of requests, representing sustained request rates of multiple operations per second”. This is a classic low-fidelity, high-volume signal that is often dismissed as noise.

- Commodity and Open-Source Penetration Testing Tools: The attack utilized a combination of “standard security utilities” and “open source penetration testing tools”.

- Traffic from Browser Automation: The report explicitly calls out “Browser automation for web application reconnaissance” to “systematically catalog target infrastructure” and “analyze authentication mechanisms”.

- Automated Stolen Credential Testing: The AI didn’t just test one password; it “systematically tested authentication against internal APIs, database systems, container registries, and logging infrastructure”. This automated, broad, and rapid testing looks very different from a human’s manual attempts.

- Audit for Unauthorized Account Creation: This is a critical, high-confidence post-exploitation signal. In one successful compromise, the AI’s autonomous actions included the creation of a “persistent backdoor user”.
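Two of the patterns above, machine-speed request rates and broad credential testing, lend themselves to simple sliding-window hunts. The following is a minimal sketch, not a production detector: the event shapes (`(timestamp, source)` and `(timestamp, account, service)` tuples), the function names, and every threshold are assumptions chosen for illustration and would need tuning against your own baseline.

```python
from collections import defaultdict, deque


def find_machine_speed_sources(events, window_seconds=60.0, max_requests=120):
    """Flag sources that send more than max_requests in any sliding window.

    events: iterable of (timestamp_seconds, source_id), sorted by timestamp.
    The defaults (more than 120 requests per 60 s, i.e. a sustained rate
    above 2 per second) are illustrative assumptions, not values from the report.
    """
    recent = defaultdict(deque)  # source_id -> timestamps inside the window
    flagged = set()
    for ts, source in events:
        window = recent[source]
        window.append(ts)
        # Drop timestamps that have aged out of the window.
        while ts - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_requests:
            flagged.add(source)
    return flagged


def find_broad_credential_testing(auth_events, window_seconds=300.0, min_services=4):
    """Flag accounts that authenticate to many distinct services in a short time.

    auth_events: iterable of (timestamp_seconds, account, service), sorted
    by timestamp. min_services is a hypothetical threshold.
    """
    recent = defaultdict(deque)  # account -> (timestamp, service) pairs
    flagged = set()
    for ts, account, service in auth_events:
        window = recent[account]
        window.append((ts, service))
        while ts - window[0][0] > window_seconds:
            window.popleft()
        if len({svc for _, svc in window}) >= min_services:
            flagged.add(account)
    return flagged
```

Both functions expect events already sorted by time, for example from a SIEM export. Neither result is a verdict on its own; each is a lead to be investigated alongside the other signals in this list.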

6. The Defender’s Challenge: A Flood of Low-Fidelity Noise

The detection patterns listed above create the central challenge of defending against AI-orchestrated attacks. The problem isn’t just the number of alerts; it’s that nearly all of them are low-fidelity, so no single alert justifies escalation on its own.

This new execution model creates critical blind spots:

- The Volume Blind Spot: The AI’s automated nature creates a flood of low-confidence alerts. No human-only SOC can manually triage this volume.

- The Temporal (Speed) Blind Spot: A human-led intrusion might take days or weeks. Here, the AI compressed a full database extraction – from authentication to data parsing – into just 2-6 hours. Our human-based detection and response loops are often too slow to keep up.

- The Context Blind Spot: The AI’s real power is connecting many small, seemingly unrelated signals (a scan, a login failure, a data query) into a single, coherent attack chain. A human analyst, looking at these alerts one by one, would likely miss the larger pattern.
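The context blind spot can be narrowed by grouping low-fidelity alerts by the entity they touch and checking whether several distinct attack stages appear close together in time. The sketch below illustrates that idea under stated assumptions: the alert fields (`ts`, `entity`, `stage`), the function name, the six-hour window (loosely chosen to match the 2-6 hour extraction window mentioned above), and the three-stage threshold are all hypothetical.

```python
from collections import defaultdict


def correlate_alerts(alerts, window_seconds=6 * 3600, min_stages=3):
    """Group alerts per entity and report entities showing a multi-stage chain.

    alerts: list of dicts with keys 'ts' (seconds), 'entity', and 'stage'.
    Returns {entity: sorted list of stages} for each entity where at least
    min_stages distinct stages occur within one window_seconds span.
    """
    by_entity = defaultdict(list)
    for alert in alerts:
        by_entity[alert["entity"]].append(alert)

    chains = {}
    for entity, items in by_entity.items():
        items.sort(key=lambda a: a["ts"])
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans <= window_seconds.
            while items[end]["ts"] - items[start]["ts"] > window_seconds:
                start += 1
            stages = {a["stage"] for a in items[start:end + 1]}
            if len(stages) >= min_stages:
                chains[entity] = sorted(stages)
                break
    return chains
```

A scan, a login failure, and a data query against the same host within a few hours would surface as one chain, whereas the same three alerts spread over several days would not.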

7. The Importance of Autonomous Triage and Investigation

When the attack is autonomous, the defense must also have autonomous capabilities.

We cannot hire our way out of this speed and scale problem. The security operations model must shift. The goal of autonomous triage is not just to add context, but to handle the entire investigation process for every single alert, especially the thousands of low-severity signals that AI-driven attacks create.

An autonomous system can automatically investigate these signals at machine speed, determine which ones are irrelevant noise, and suppress them.

This is the true value: the system escalates only the high-confidence, confirmed incidents that actually matter. This frees your human analysts from chasing noise and allows them to focus on real, complex threats.
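The suppress-or-escalate loop described above can be pictured with a few lines of code. This is a deliberately simplified sketch and not a description of any vendor’s implementation: the `Alert` type, the `triage` function, the `investigate` callback (which stands in for whatever automated investigation produces a confidence score), and the 0.8 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass(frozen=True)
class Alert:
    alert_id: str
    severity: str
    summary: str


def triage(
    alerts: Iterable[Alert],
    investigate: Callable[[Alert], float],
    escalate_at: float = 0.8,
) -> Tuple[List[Alert], List[Alert]]:
    """Investigate every alert and split the results into two queues.

    investigate returns a confidence in [0, 1] that the alert reflects a
    real incident. Alerts at or above escalate_at go to human analysts;
    the rest are suppressed (in practice they would still be logged).
    """
    escalated: List[Alert] = []
    suppressed: List[Alert] = []
    for alert in alerts:
        confidence = investigate(alert)
        if confidence >= escalate_at:
            escalated.append(alert)
        else:
            suppressed.append(alert)
    return escalated, suppressed
```

The point of the design is that every alert, including low-severity ones, passes through `investigate`; severity alone never decides whether something is dropped.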

This is exactly the type of challenge autonomous triage systems like the one we’ve built at Intezer were designed to solve. As Anthropic’s own report concludes, “Security teams should experiment with applying AI for defense in areas like SOC automation, threat detection… and incident response”.

8. Evolving Your Offensive Security Program

To defend against this threat, we must be able to test our defenses against it. All offensive security activities (internal red teams, external penetration tests, and attack simulations) must evolve.

It is no longer enough for offensive security teams to manually simulate attacks. To truly test your defenses, your red teams or external pentesters must adopt agentic AI frameworks themselves.

The new mandate is to simulate the speed, scale, and orchestration of an AI-driven attack, similar to the one detailed in the Anthropic report. Only then can you validate whether your defensive systems and automated processes can withstand this new class of automated onslaught. Naturally, all such simulations must be done safely and ethically to prevent any real-world risk.

9. Conclusion: When the Threat Model Changes, Our Processes Must, Too

The Anthropic report doesn’t introduce a new magic exploit. It introduces a new execution model that we now need to design our defenses around.

Let’s summarize the key, practical takeaways:

- AI-orchestrated attacks are a proven, documented reality.

- The primary threat is speed and scale, which overwhelm manual security processes.

- Security leaders must prioritize automating investigation and triage to suppress the noise and escalate what matters.

- We must evolve offensive security testing to simulate this new class of autonomous threat.

This report is a clear signal. The threat model has changed. Your security architecture, processes, and playbooks must change with it. The same applies if you rely on an MSSP: verify that they’re evolving their detection and triage capabilities for this new model. This shift isn’t hype; it’s a practical change in execution speed. With the right adjustments and automation, defenders can meet this challenge.

To learn more, read the Anthropic blog post at https://www.anthropic.com/news/disrupting-AI-espionage and the accompanying full technical report.